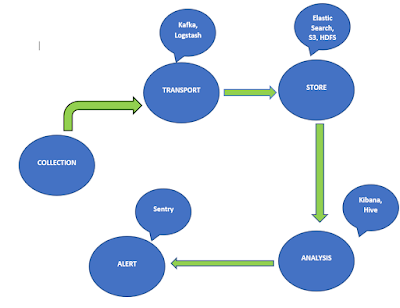

Create a Central Logging System

Logging is one of the most important parts of any system. Logs give you insight into the application: what kinds of errors are occurring, which components are causing them, and how the application behaves when something goes wrong. Almost every application writes logs, either to the file system or to a database. If your application runs on multiple hosts, a central logging system becomes crucial, because it lets you collect, aggregate and maintain logs in one place and run operations on top of them.

Many tools are available, and each solves part of the problem, but a robust logging system has to be built by combining them.

Collection

Imagine you have a web application running on a server and something goes down. Your developers or operations team need to access log data quickly to troubleshoot the live issue, so you need a solution that can monitor changes in the log files in near real time. In practice, this means replicating the logs off the host as they are written.
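As a rough illustration of watching a log file for changes, here is a minimal polling sketch in Python. The function name `read_new_lines` and the offset-based approach are assumptions made for this example, not part of any particular tool. A real collector would also need to persist the offset and handle rotation more carefully.

```python
import os


def read_new_lines(path, offset=0):
    """Return (lines, new_offset) for complete lines written after `offset`.

    Hypothetical helper: a collector would call this on a timer and
    remember the returned offset between calls.
    """
    # If the file is now smaller than our offset it was probably
    # truncated or rotated, so start again from the beginning.
    if os.path.getsize(path) < offset:
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Consume only up to the last newline, so a half-written line
    # is left in place for the next poll.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end
```

Calling it repeatedly with the previously returned offset yields only the lines appended since the last call.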

Transport

If you have multiple hosts running, log data can accumulate quickly. There should be an efficient and reliable way to transport this data to the centralized application without losing any of it. Many frameworks are available for transporting log data. One approach is to plug in input sources directly and let the framework collect the logs. Another is to send log data via an API: the application code is written to log directly to the central system, which can reduce latency and improve reliability.
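To make the "send via API without losing data" idea concrete, here is a small sketch of a batching shipper with retries. The class `BatchShipper` and its parameters are hypothetical, and `send` stands in for whatever transport you use (an HTTP POST, a socket write, and so on). Treating `OSError` as the retryable failure is also an assumption.

```python
import json
import time


class BatchShipper:
    """Buffers log records and ships them in batches (illustrative only)."""

    def __init__(self, send, batch_size=100, max_retries=3, backoff_seconds=0.5):
        self.send = send                  # callable taking one string payload
        self.batch_size = batch_size
        self.max_retries = max_retries
        self.backoff_seconds = backoff_seconds
        self.buffer = []

    def add(self, record):
        """Queue a record and flush once the batch is full."""
        self.buffer.append(record)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        """Try to send the buffer; return True on success, False otherwise."""
        if not self.buffer:
            return True
        # One JSON document per line, a common format for bulk log shipping.
        payload = "\n".join(json.dumps(r) for r in self.buffer)
        for attempt in range(self.max_retries):
            try:
                self.send(payload)
                self.buffer.clear()
                return True
            except OSError:
                # Exponential backoff before the next attempt.
                time.sleep(self.backoff_seconds * (2 ** attempt))
        # Keep the records in the buffer so a later flush can retry them.
        return False
```

The key design choice is that records leave the buffer only after a successful send, so a temporary outage delays logs instead of dropping them.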

If you need to support a number of input sources, you can use Logstash, an open source log collector written largely in Ruby (it runs on JRuby).

Storage

Now that transport is in place, the logs need a destination: a store where all the log data will be saved. The storage system should be highly scalable, because the data will keep growing and the system must handle that growth over time. The volume of log data depends on the size of your applications: an application running on many servers or in many containers will generate more logs. Elasticsearch is a good choice for interactive data analysis, and HDFS for working with large volumes of raw data.
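One common way to keep growing log storage manageable is to partition it by day and drop partitions past a retention period. The sketch below shows only the naming and selection logic. The helper names, the `logs-YYYY.MM.DD` pattern and the retention policy are assumptions for illustration, and nothing here talks to a real storage system.

```python
from datetime import datetime, timedelta, timezone


def index_name(ts, prefix="logs"):
    """Name of the daily partition for a timezone-aware timestamp."""
    return f"{prefix}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


def expired_indices(names, today, keep_days, prefix="logs"):
    """Return the partition names older than `keep_days` before `today`."""
    cutoff = today - timedelta(days=keep_days)
    expired = []
    for name in names:
        if not name.startswith(prefix + "-"):
            continue  # not one of our partitions
        try:
            day = datetime.strptime(name[len(prefix) + 1:], "%Y.%m.%d").date()
        except ValueError:
            continue  # suffix is not a date, leave it alone
        if day < cutoff:
            expired.append(name)
    return expired
```

With daily partitions, removing old data becomes a cheap "drop whole partition" operation instead of a scan-and-delete over individual records.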

Analysis

Logs are meant for analysis and analytics. Once your logs are stored in a centralized location, you need a way to analyze them. Many tools are available for log analysis. If you need a UI, you can index the parsed data in Elasticsearch and use Kibana or Graylog2 to query and inspect it. Grafana and Kibana can also be used to build dashboards that show analytics in near real time.
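Before logs can be queried, each line usually has to be parsed into fields. The following sketch assumes a hypothetical line format of `<timestamp> <LEVEL> <component>: <message>`. Your applications will likely use a different format, so the regular expression is only an example.

```python
import re
from collections import Counter

# Assumed format: "2024-01-02T03:04:05Z ERROR payments: card declined"
LINE_RE = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+"
    r"(?P<component>[\w.-]+):\s+(?P<message>.*)$"
)


def parse_line(line):
    """Return a dict of fields, or None if the line does not match."""
    match = LINE_RE.match(line)
    return match.groupdict() if match else None


def errors_by_component(lines):
    """Count ERROR lines per component, skipping unparseable lines."""
    counts = Counter()
    for line in lines:
        record = parse_line(line)
        if record and record["level"] == "ERROR":
            counts[record["component"]] += 1
    return counts
```

A count of errors per component is the kind of aggregate that a dashboard panel would typically display.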

Alerting

Logs are very useful for troubleshooting errors, so it helps to have an alerting system that notifies us of any change in log patterns or calculated metrics. It's far better to have alerting built into the logging system, sending an email or other notification, than to have someone keep watching the logs for changes. Many error reporting tools are available, such as Sentry or Honeybadger.
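A simple form of alerting on a calculated metric is to count errors in a sliding time window and fire when the count crosses a threshold. The class below is a minimal sketch of that idea. The name `ErrorSpikeDetector`, the default window and the default threshold are all assumptions, and sending the actual email or notification is left to the caller.

```python
from collections import deque


class ErrorSpikeDetector:
    """Flags when too many errors occur within a sliding window."""

    def __init__(self, window_seconds=60, threshold=5):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.error_times = deque()  # timestamps of recent errors, oldest first

    def observe(self, timestamp, level):
        """Record one log event; return True if an alert should fire."""
        # Drop errors that have fallen out of the window.
        while self.error_times and self.error_times[0] <= timestamp - self.window_seconds:
            self.error_times.popleft()
        if level == "ERROR":
            self.error_times.append(timestamp)
        return len(self.error_times) >= self.threshold
```

Timestamps are plain numbers (seconds) here and are expected to arrive in order. The caller decides what to do when `observe` returns `True`, for example sending a notification once and then suppressing repeats for a while.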

Thanks for dropping by!! Feel free to comment on this post or you can drop me an email at naik899@gmail.com
